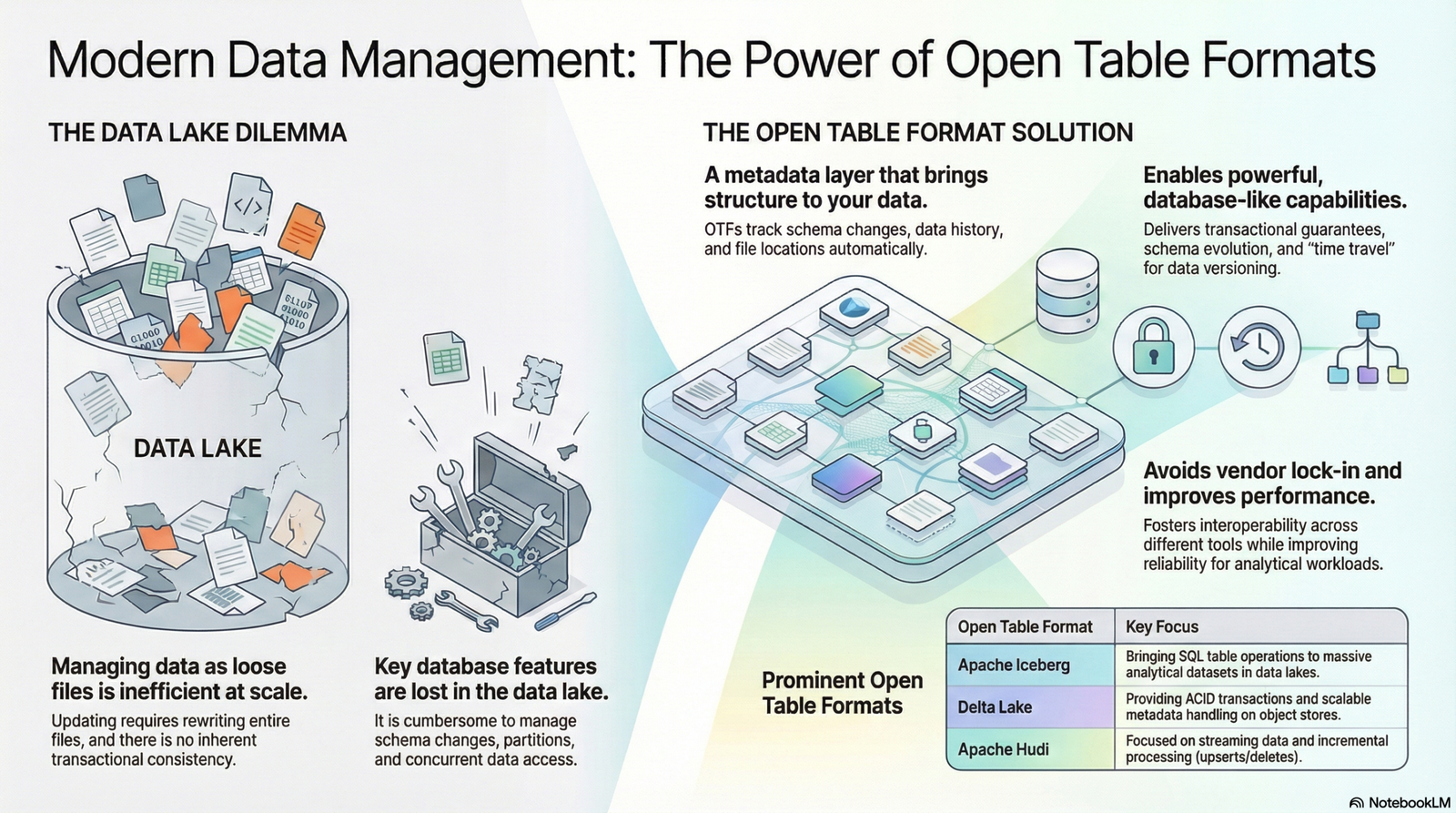

Introduction to Delta Lake Format

Delta Lake is an open-source storage format that adds ACID transactions, schema enforcement, and data versioning (time travel) to data stored as Parquet files in a data lake. It is designed to address common data lake challenges such as data quality, data reliability, and data lifecycle management.
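The versioning comes from a transaction log: each commit to a Delta table is written as a numbered JSON file (for example _delta_log/00000000000000000000.json for version 0), and each line of that file is one action, such as adding or removing a data file. The sketch below is a deliberately simplified model of how a reader rebuilds a table snapshot by replaying "add" and "remove" actions in version order. It assumes in-memory commit strings and ignores checkpoints, metadata, protocol actions, and deletion vectors, which real Delta readers also handle; the file names are hypothetical.

```python
import json


def replay_delta_log(commits):
    """Return the set of data-file paths that make up a table snapshot.

    `commits` is a list of commit-file contents ordered by version
    (version 0 first). Each commit holds one JSON action per line.
    Simplified: only "add" and "remove" actions are interpreted.
    """
    live_files = set()
    for commit in commits:
        for line in commit.splitlines():
            if not line.strip():
                continue  # skip blank lines
            action = json.loads(line)
            if "add" in action:
                live_files.add(action["add"]["path"])
            elif "remove" in action:
                live_files.discard(action["remove"]["path"])
    return live_files


# Hypothetical history: version 0 creates the table, version 1 appends,
# version 2 compacts both files into a single new one.
commits = [
    '{"add": {"path": "part-000.parquet"}}',
    '{"add": {"path": "part-001.parquet"}}',
    "\n".join([
        '{"remove": {"path": "part-000.parquet"}}',
        '{"remove": {"path": "part-001.parquet"}}',
        '{"add": {"path": "part-002.parquet"}}',
    ]),
]

print(sorted(replay_delta_log(commits)))      # latest snapshot (version 2)
print(sorted(replay_delta_log(commits[:2])))  # snapshot as of version 1
```

Replaying only a prefix of the log is what makes reading an older version possible: the files removed by a later commit are still on storage, so an earlier snapshot can be reconstructed.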

Reading Delta Lake Format in AWS Glue

AWS Glue is a fully managed extract, transform, and load (ETL) service that makes it easy to prepare and load data for analytics. It supports a range of data formats, and AWS Glue 3.0 and later can read and write Delta Lake tables natively.

To read the Delta Lake format in AWS Glue, follow these steps:

- Set up an AWS Glue job in AWS Glue Studio.

- Specify the Delta Lake format as the data source in the job configuration.

- Write the job script so that it reads the Delta table, either through the AWS Glue Data Catalog or directly from its Amazon S3 path.

- Run the AWS Glue job to read the data from the Delta table.

Step-by-Step Guide

1. Set up an AWS Glue job:

Log in to the AWS Management Console and open the AWS Glue service. In the navigation pane, choose ‘ETL Jobs’, then choose ‘Author code with a script editor’. Select ‘Spark’ as the engine and choose ‘Create Script’.

2. Specify the Delta Lake format as the data source:

In the ‘Job details’ tab, enter a name for the job and select an ‘IAM Role’ that can access the S3 location of the Delta table. Under ‘Job parameters’, add the key ‘--datalake-formats’ with the value ‘delta’. Then add a second key, ‘--conf’, with the following value:

```
spark.sql.extensions=io.delta.sql.DeltaSparkSessionExtension --conf spark.sql.catalog.spark_catalog=org.apache.spark.sql.delta.catalog.DeltaCatalog --conf spark.delta.logStore.class=org.apache.spark.sql.delta.storage.S3SingleDriverLogStore
```
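The ‘--conf’ value looks odd because job parameters are a key/value map: there can be only one ‘--conf’ key, so additional Spark settings are chained inside its value with literal " --conf " separators. The hypothetical helper below is a minimal sketch that assembles both job parameters from a dictionary of Spark settings, which makes the chaining explicit and easy to extend.

```python
def build_delta_job_arguments(spark_confs):
    """Build AWS Glue job parameters that enable Delta Lake.

    Hypothetical helper. Only one "--conf" key can exist, so every
    Spark setting after the first is appended with " --conf ".
    """
    pairs = [f"{key}={value}" for key, value in spark_confs.items()]
    return {
        "--datalake-formats": "delta",
        "--conf": " --conf ".join(pairs),
    }


# The same three Spark settings used in the job parameter above.
DELTA_CONFS = {
    "spark.sql.extensions": "io.delta.sql.DeltaSparkSessionExtension",
    "spark.sql.catalog.spark_catalog": "org.apache.spark.sql.delta.catalog.DeltaCatalog",
    "spark.delta.logStore.class": "org.apache.spark.sql.delta.storage.S3SingleDriverLogStore",
}

job_args = build_delta_job_arguments(DELTA_CONFS)
print(job_args["--conf"])
```

If you create jobs programmatically rather than in the console, a dictionary like job_args is the shape expected by the DefaultArguments parameter of the Glue CreateJob API (for example boto3's create_job).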

3. Configure the job script to read the Delta table, either with the AWS Glue libraries or with a Spark DataFrame:

Using the Glue libraries (reading a table registered in the AWS Glue Data Catalog):

```python
import sys
from awsglue.transforms import *
from awsglue.utils import getResolvedOptions
from pyspark.context import SparkContext
from awsglue.context import GlueContext
from awsglue.job import Job

## @params: [JOB_NAME]
args = getResolvedOptions(sys.argv, ['JOB_NAME'])

# Standard Glue job boilerplate: Spark context, Glue context, and job bookkeeping
sc = SparkContext()
glueContext = GlueContext(sc)
spark = glueContext.spark_session
job = Job(glueContext)
job.init(args['JOB_NAME'], args)

# Extra reader options, if any; an empty dict is enough for a catalog Delta table
additional_options = {}

# Read the Delta table registered in the Data Catalog into a Spark DataFrame
df = glueContext.create_data_frame.from_catalog(
    database="<your_database_name>",
    table_name="<your_table_name>",
    additional_options=additional_options
)
df.show()

job.commit()
```

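Using the Spark DataFrame API (reading directly from the table's S3 path):

```python
# 'spark' is the SparkSession from the script above (glueContext.spark_session)
deltaDF = spark.read.format("delta").load("<s3path for delta table>")
deltaDF.show()
```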
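By default, load() reads the latest version of the table. Delta Lake also supports reading an earlier version through the versionAsOf and timestampAsOf reader options. The helper below is a hypothetical sketch that builds those options for a single point in history; it assumes you pass the result to the reader with options(**...), and it rejects asking for a version and a timestamp at the same time so each read is pinned to exactly one snapshot.

```python
def delta_read_options(version=None, timestamp=None):
    """Return Delta reader options for one point in table history.

    Hypothetical helper. With no arguments it returns an empty dict,
    so load() reads the latest snapshot.
    """
    if version is not None and timestamp is not None:
        raise ValueError("specify either version or timestamp, not both")
    if version is not None:
        if version < 0:
            raise ValueError("Delta table versions start at 0")
        return {"versionAsOf": str(version)}
    if timestamp is not None:
        return {"timestampAsOf": timestamp}
    return {}


# Inside the Glue job (requires the Spark session from the script above):
# oldDF = (spark.read.format("delta")
#          .options(**delta_read_options(version=0))
#          .load("<s3path for delta table>"))
print(delta_read_options(version=3))
```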

4. Run the AWS Glue job:

Click ‘Save’, then return to the ‘Job details’ tab and review the remaining settings, such as the Glue version (3.0 or later is required for ‘--datalake-formats’), the worker type, and the requested number of workers.

Finally, click ‘Run job’ to start the job, which reads the Delta table from Amazon S3.
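The same job can also be defined outside the console. The sketch below is a hypothetical helper that assembles keyword arguments for the Glue CreateJob API and refuses Glue versions older than 3.0, since ‘--datalake-formats’ is not available there. The default Glue version, worker type, worker count, job name, role ARN, and script path are all illustrative assumptions, not values from this guide.

```python
def build_glue_job_definition(name, role_arn, script_location, default_arguments,
                              glue_version="4.0", worker_type="G.1X",
                              number_of_workers=2):
    """Assemble keyword arguments for the Glue CreateJob API.

    Hypothetical helper; defaults are illustrative assumptions.
    """
    major = int(glue_version.split(".")[0])
    if major < 3:
        raise ValueError("--datalake-formats requires AWS Glue 3.0 or later")
    return {
        "Name": name,
        "Role": role_arn,
        # "glueetl" is the command name for Spark ETL jobs
        "Command": {"Name": "glueetl", "ScriptLocation": script_location,
                    "PythonVersion": "3"},
        "DefaultArguments": default_arguments,
        "GlueVersion": glue_version,
        "WorkerType": worker_type,
        "NumberOfWorkers": number_of_workers,
    }


# Hypothetical values for illustration only.
definition = build_glue_job_definition(
    name="my-delta-reader",
    role_arn="arn:aws:iam::123456789012:role/MyGlueRole",
    script_location="s3://my-bucket/scripts/read_delta.py",
    default_arguments={"--datalake-formats": "delta"},
)
print(definition["GlueVersion"], definition["WorkerType"])
```

With boto3 installed and credentials configured, the dictionary could be passed as glue.create_job(**definition), and the job started with glue.start_job_run(JobName=definition["Name"]), where glue is a boto3 Glue client.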

Conclusion

Native Delta Lake support in AWS Glue lets a Glue job read Delta tables, with their transactional guarantees and version history, either through the Glue Data Catalog or directly from Amazon S3. By following the step-by-step guide above, you can set up an AWS Glue job that reads Delta Lake data and use it in your analytics workloads.